When Apple launched its credit card in August 2019, it only took a few months for someone to accuse it of offering smaller lines of credit to women.

Algorithmic bias has been a huge issue in the tech industry — whether it’s Apple’s credit card or biases in visual databases used to train artificial intelligence systems around the world. But at the heart of the issue is digital safety. How are tech organizations working safety into the products they design?

The answer is complicated: even though the tech industry is maturing, it’s still early in the evolution of the commercial web. Organizations are still struggling to determine how to use web- and internet-based technologies, and how to do so safely and profitably.

“We don't even know what digital is. We don't know who the people are, who are supposed to run it, really. And of the groups of people that think they're all running it, they're all fighting with each other. Content people are saying, ‘It’s all us.’ UX people are saying, ‘It's all us.’ Technology people are saying, ‘It's all us.’ The reality is it's everybody,” said Lisa Welchman, a digital governance expert, who wrote Managing Chaos: Digital Governance by Design.

Welchman and design expert Andy Vitale will join Fluxible co-chair Meena Kothandaraman for a fireside chat about designing for digital safety at Fluxible 2021. (Early bird tickets for Fluxible 2021 are on sale now.)

Welchman and Vitale have been collaborating since they met at another conference, where each was speaking about an area of expertise: Welchman about governance and Vitale about responsible design. Right away, they saw how their talks overlapped. Vitale said that’s when they started wondering how to bridge the gap so that people realize how important it is for design and governance to align when building digital products. They decided to write a book on digital safety, but realized there were too many issues that weren’t clear. How do you define the digital space? How do you define safety in the digital space? So they started a podcast that brings together their two disciplines and looks at the intersection of design and governance.

People want to feel safe when they’re interacting online, said Welchman.

“They want their kids to feel safe. They want their loved ones to feel safe. They want to feel safe and not exploited online. And we’re just trying to explore, ‘What do we have to do to get that experience online?’ Because right now that is not what we've got,” said Welchman.

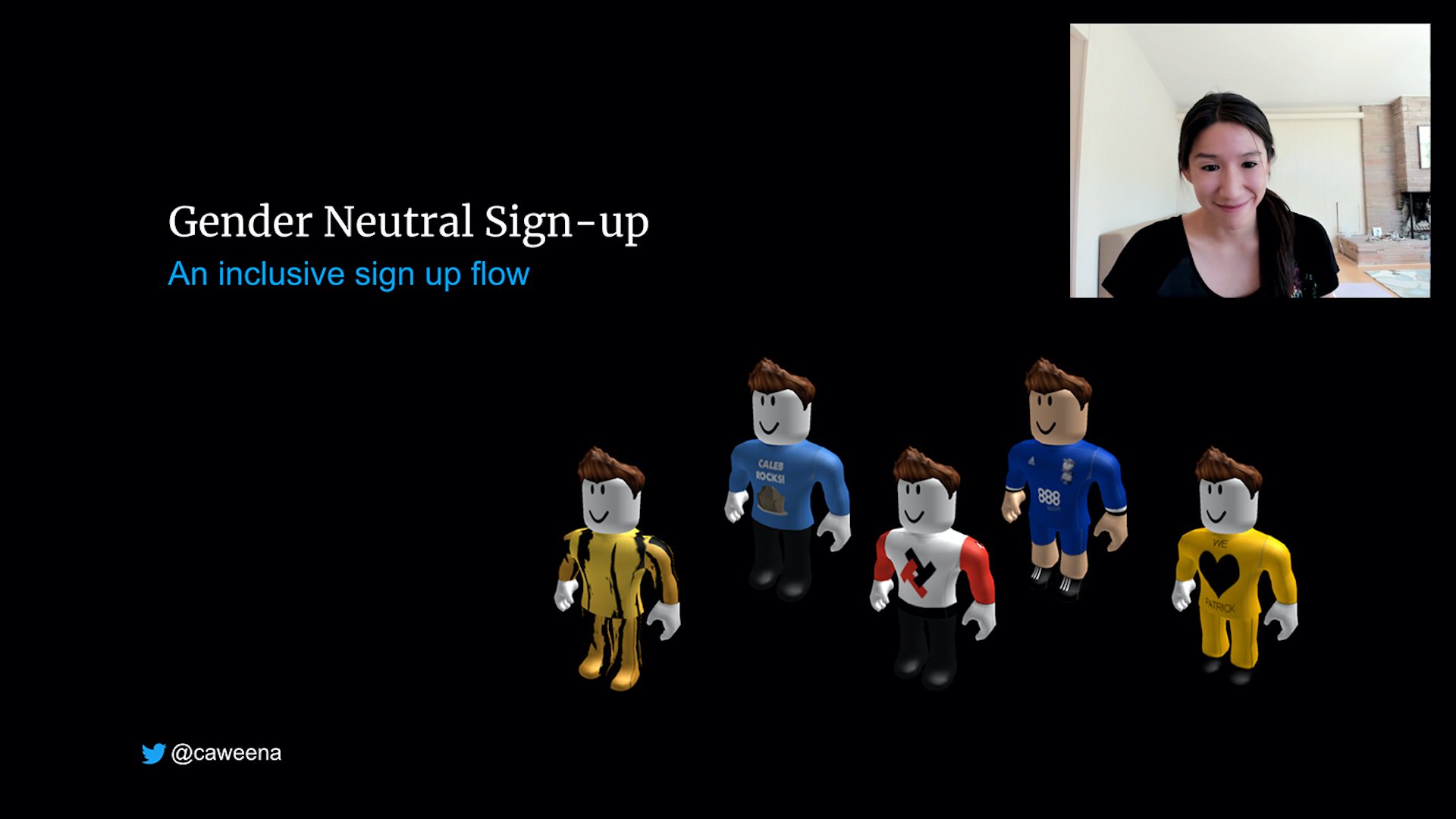

If they were going to write a book about designing safe digital products and talk about it at conferences such as Fluxible, Vitale and Welchman decided they needed to define what “safe” means. So that’s what they did. They worked out a definition broad enough for any organization to embrace while still being inclusive. Their definition?

“Safe products are designed to minimize emotional, economic, physical, and psychological harm to any human being and to society,” said Vitale.

The definition is not meant to be one-size-fits-all, said Welchman, but it includes some fundamental principles while still offering organizations the flexibility to tune it for themselves. The key is for organizations to think about what digital means to them through a safety lens, she said. But too often, Welchman has seen organizations fail to think through what digital means.

When she’s working on governance projects, Welchman said she tries to create collaboration models that will set the stage for shared goals and lead to intentional outcomes. One of the first things she does is ask people and organizations she’s working with to be clear about what is within the scope of the term “digital” in their organization. Many can’t, or they think it’s just their marketing websites. But Welchman pushes them to broaden their views. Do they have an employee collaboration space, she asks? Mobile channels? Social media channels? If she’s working with an automotive company, she points out they have the internet embedded into their hard goods, which is also part of their digital space.

“I think that because we're 30 years in, people really need to just slow down for a second and say, ‘OK, what is digital in our organization?’ And then look at that pile of stuff through a lens of safety. Are we actually being inclusive? Are we causing any psychological harm, causing emotional harm? Do people feel safe around our products and services? And I would say most organizations probably have work to do on one of those,” said Welchman.

How to build a product with safety in mind

Building a product with safety in mind happens at each phase of the product development lifecycle, said Vitale. In the researching phase, it’s about creating safety personas and thinking about what could go wrong.

“As you think about people when you're trying to build these products, just understanding who's involved, who's not involved, understanding what safety means to them, making sure that you have different levels of safety built into those personas,” said Vitale.

And have policies in place as you scale, he suggested, so that when something goes wrong, you know which levers to pull so you can roll back decisions and react.

“You can't have something be 100 per cent safe,” said Vitale. “There's always the risk of harm. When that harm comes, what do you do? Being prepared for that is what separates good companies that handle it well from others.”

Beyond baking safety into every phase of product development, organizations also need to create conditions so that people feel comfortable raising concerns, said Welchman. Management and executives set the tone for that, she pointed out.

“You have to make it OK for Pamela to put her hand up and say, ‘You know, somebody gave me a task to code this stuff and this isn't going to fly’ and not lose her job or not be otherwise penalized,” said Welchman. “And I think most of the harm, the big harm that we see online, comes because employees are not in an environment where they feel safe raising safety concerns.”

Designing for safety in an agile environment

Some organizations, whether they’re building a product or a service, work within agile frameworks that thrive on the concept of building fast and breaking things. But can agile and safety live together in the digital space?

“The ‘move fast and break things’ is what got us to where we are,” said Vitale. “Like we move stupid fast for the sake of just moving fast and that causes a lot of errors. Agile gives us this idea that we're going to go back and fix it but nine times out of 10 we don't go back and fix anything unless it affects the bottom line.”

It’s costly and hard to go back and fix something after you already broke it, Vitale pointed out. And when it comes to safety, once you erode that trust, it’s almost impossible to get it back, he added.

The premise of moving fast and breaking things stems from the dot-com culture of the ’90s, when spaces were being disrupted in ways that people couldn’t understand and that felt scary, said Welchman. Bookstores were closing, newspapers were going under, and people began reacting quickly because they felt like they had to do something.

“Unfortunately that dynamic of moving fast and reacting because the world's falling apart and everything's crazy, got embedded into the culture of digital makers,” said Welchman. “So digital makers think that's just how you work. And it's like, that's not how you actually do good work.”

The key, they both agreed, is to organize teams to work on measurable chunks of work, test and learn from them, and put safety levers into that process from the beginning before increasing their velocity.

As immature as the tech industry is, it still feels late to have a conversation around safety and harm, said Vitale. Whenever another story comes out about algorithmic bias, he scratches his head because he knows it could have been avoided. The industry isn’t as evolved as it thinks it is.

“We’re making a lot of mistakes and we’ve been the cause of a lot of these mistakes,” he said.

But he and Welchman are pressing on, raising awareness about why people need to think about safety around their digital products early and often.

“If we can raise any awareness to what could go wrong, what has gone wrong, how we can make sure it doesn't happen again, how we can involve the right people in the process of building, how we can make sure that there are decision‑makers around and policies in place before it gets too late. Like if one person decides they're going to change the way they do things, it's been worth it,” he said.